The companion code for this series is available in two ways:

- Via Baidu Netdisk: https://pan.baidu.com/s/1XuxKa9_G00NznvSK0cr5qw?pwd=mnsj (extraction code: mnsj)
- Via GitHub: https://github.com/returu/PyTorch

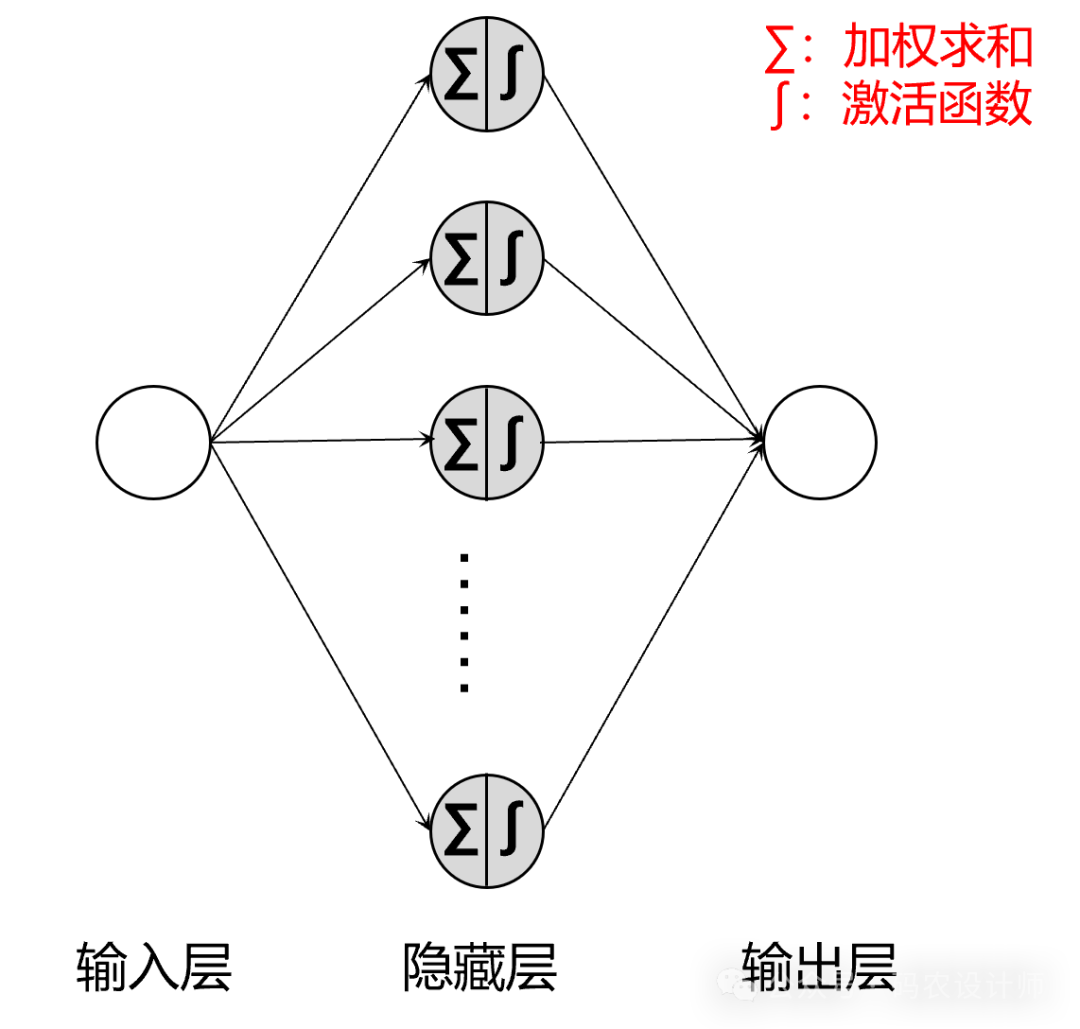

Building the neural network:

>>> import torch.nn as nn
>>> # Neural network model: 1 input -> 13 hidden units with Tanh -> 1 output
>>> model_1 = nn.Sequential(
...     nn.Linear(1, 13),
...     nn.Tanh(),
...     nn.Linear(13, 1)
... )
>>> model_1
Sequential(
  (0): Linear(in_features=1, out_features=13, bias=True)
  (1): Tanh()
  (2): Linear(in_features=13, out_features=1, bias=True)
)
>>> # parameters() yields the weight and bias of each Linear layer in order
>>> for param in model_1.parameters():
...     print(param.shape)
...
torch.Size([13, 1])
torch.Size([13])
torch.Size([1, 13])
torch.Size([1])
>>> # named_parameters() also yields each name; by default it is the layer index
>>> for name, param in model_1.named_parameters():
...     print(name, '-->', param.shape)
...
0.weight --> torch.Size([13, 1])
0.bias --> torch.Size([13])
2.weight --> torch.Size([1, 13])
2.bias --> torch.Size([1])
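The shapes printed above determine how many trainable values the network has. As a minimal sketch (the variable names `counts` and `total` are illustrative and not part of the original code), the parameters can be counted with `numel()`:

```python
import torch.nn as nn

# Same architecture as model_1 in the session above.
model = nn.Sequential(
    nn.Linear(1, 13),
    nn.Tanh(),
    nn.Linear(13, 1),
)

# numel() returns the number of elements in each parameter tensor.
counts = [p.numel() for p in model.parameters()]
total = sum(counts)

print(counts)  # [13, 13, 13, 1]
print(total)   # 40
```

The hidden layer contributes 13 weights and 13 biases, and the output layer contributes 13 weights and 1 bias, which gives 40 trainable values in total.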

>>> from collections import OrderedDict
>>> # Neural network model with named layers
>>> model_2 = nn.Sequential(OrderedDict([
...     ('hidden_layer', nn.Linear(1, 13)),
...     ('hidden_activation', nn.Tanh()),
...     ('output_layer', nn.Linear(13, 1))])
... )
>>> model_2
Sequential(
  (hidden_layer): Linear(in_features=1, out_features=13, bias=True)
  (hidden_activation): Tanh()
  (output_layer): Linear(in_features=13, out_features=1, bias=True)
)
>>> for name, param in model_2.named_parameters():
...     print(name, '-->', param.shape)
...
hidden_layer.weight --> torch.Size([13, 1])
hidden_layer.bias --> torch.Size([13])
output_layer.weight --> torch.Size([1, 13])
output_layer.bias --> torch.Size([1])
>>> # Access a specific parameter by using the submodule name as an attribute
>>> model_2.hidden_layer.bias
Parameter containing:
tensor([-0.9065, -0.8778,  0.9589, -0.8350,  0.4945, -0.0443, -0.9624,  0.8843,
        -0.4794,  0.0036, -0.7114, -0.6724, -0.7271], requires_grad=True)
>>> # No backward pass has been run yet, so the gradient is None
>>> model_2.hidden_layer.bias.grad
>>> print(model_2.hidden_layer.bias.grad)
None
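To see when `.grad` stops being `None`, the following sketch runs one backward pass on a model with the same layout. The input `x_demo` and the use of `.sum()` as a stand-in loss are assumptions made purely for illustration; any scalar computed from the model output would work.

```python
from collections import OrderedDict

import torch
import torch.nn as nn

model = nn.Sequential(OrderedDict([
    ('hidden_layer', nn.Linear(1, 13)),
    ('hidden_activation', nn.Tanh()),
    ('output_layer', nn.Linear(13, 1)),
]))

# Before any backward pass the gradient has not been allocated.
grad_before = model.hidden_layer.bias.grad

# Hypothetical input: 4 samples with 1 feature each.
x_demo = torch.ones(4, 1)
# Stand-in scalar "loss" so that backward() can be called.
loss = model(x_demo).sum()
loss.backward()

# After backward(), .grad holds a tensor with the same shape as the parameter.
grad_after = model.hidden_layer.bias.grad

print(grad_before)       # None
print(grad_after.shape)  # torch.Size([13])
```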

Replacing the linear model with a neural network:

-

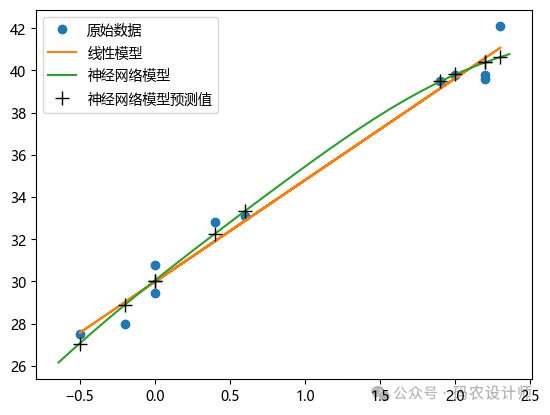

生成数据:

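import torch

# Fix the random seed so that results are reproducible
torch.manual_seed(2024)

def get_fake_data(num):
    """
    Generate random data following y = 0.5 * x + 30, plus Gaussian noise.
    """
    x = torch.randint(low=-5, high=30, size=(num,)).to(dtype=torch.float32)
    y = 0.5 * x + 30 + torch.randn(num)
    return x, y

x, y = get_fake_data(num=11)
# Rescale the input by a factor of 0.1
x = x * 0.1

# Add a second dimension: shape (11,) -> (11, 1), i.e. 11 samples with 1 feature,
# which is the layout nn.Linear(1, 13) expects
x = x.unsqueeze(1)
y = y.unsqueeze(1)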
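The `unsqueeze(1)` calls matter because `nn.Linear(1, 13)` treats the last dimension of its input as the feature dimension. The sketch below uses made-up values (`x_flat` is hypothetical and not the article's data) to show how the shapes change:

```python
import torch
import torch.nn as nn

layer = nn.Linear(1, 13)

# Hypothetical 1-D input holding 11 scalar samples.
x_flat = torch.arange(11, dtype=torch.float32)
# Insert a feature dimension at position 1.
x_col = x_flat.unsqueeze(1)

# Each of the 11 samples is mapped to 13 hidden features.
out = layer(x_col)

print(x_flat.shape)  # torch.Size([11])
print(x_col.shape)   # torch.Size([11, 1])
print(out.shape)     # torch.Size([11, 13])
```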

- Defining the model, loss function, and optimizer:

from collections import OrderedDict

import torch
import torch.nn as nn

# Neural network model
seq_model = nn.Sequential(OrderedDict([
    ('hidden_layer', nn.Linear(1, 13)),
    ('hidden_activation', nn.Tanh()),
    ('output_layer', nn.Linear(13, 1)),
]))

# Define the loss function (mean squared error)
loss_fn = nn.MSELoss()

# Instantiate an optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(seq_model.parameters(), lr=learning_rate)
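As a quick check of what `nn.MSELoss()` computes with its default settings, the sketch below compares it with the mean of the squared differences written out by hand. The tensors `pred` and `target` are made-up values for illustration only.

```python
import torch
import torch.nn as nn

# Hypothetical predictions and targets, shape (3, 1).
pred = torch.tensor([[1.0], [2.0], [4.0]])
target = torch.tensor([[1.0], [3.0], [2.0]])

loss_fn = nn.MSELoss()
builtin = loss_fn(pred, target)

# Squared errors are 0, 1 and 4, so the mean is 5/3.
manual = ((pred - target) ** 2).mean()

print(torch.isclose(builtin, manual))  # tensor(True)
```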

- Running the training loop to fit the parameters:

import matplotlib.pyplot as plt
import torch
from IPython import display

# Global font setting; only needed when the plot labels contain Chinese text
# plt.rcParams["font.sans-serif"] = "Microsoft YaHei"

def training_loop(n_epochs, optimizer, model, loss_fn, x, y):
    # List that records the loss of every epoch
    losses = []

    for epoch in range(1, n_epochs + 1):
        # Forward pass
        y_p = model(x)
        loss = loss_fn(y_p, y)

        # Zero the gradients
        optimizer.zero_grad()
        # Backward pass
        loss.backward()
        # Update the parameters
        optimizer.step()

        # Record the loss
        losses.append(loss.item())

        if epoch % 10 == 0:
            display.clear_output(wait=True)
            # Original data
            plt.plot(x, y, 'o', label="Original data")
            # Linear model
            plt.plot(x, (4.827 * x + 29.977), label="Linear model")
            # Get the current x- and y-axis limits
            xmin, xmax, ymin, ymax = plt.axis()
            # Evaluate the network on a grid spanning the visible x range
            x_grid = torch.arange(xmin, xmax, 0.1).unsqueeze(1)
            plt.plot(x_grid.numpy(), model(x_grid).detach().numpy(), '-', label="Neural network model")
            # Predictions at the training inputs (computed before this epoch's update)
            plt.plot(x.detach().numpy(), y_p.detach().numpy(), 'k+', markersize=10, label="Neural network predictions")
            plt.legend()
            plt.show()
            plt.pause(0.5)

    return losses

losses = training_loop(n_epochs=200, optimizer=optimizer, model=seq_model, loss_fn=loss_fn, x=x, y=y)
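The three calls `zero_grad()`, `backward()` and `step()` form the core of the loop. As a minimal sketch of what `optimizer.step()` does for plain SGD (no momentum, no weight decay), the example below checks that one step equals `w - lr * grad`. The tiny model and the tensors `x_demo` and `y_demo` are assumptions chosen for illustration, not the article's data.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

lr = 1e-2
model = nn.Linear(1, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=lr)

# Hypothetical data for a single update.
x_demo = torch.tensor([[0.0], [1.0], [2.0]])
y_demo = torch.tensor([[30.0], [35.0], [40.0]])

loss = nn.MSELoss()(model(x_demo), y_demo)
optimizer.zero_grad()
loss.backward()

# Compute the expected weight by hand before the optimizer changes it.
w_before = model.weight.detach().clone()
expected = w_before - lr * model.weight.grad

optimizer.step()
matches = torch.allclose(model.weight.detach(), expected)

print(matches)  # True
```

Calling `zero_grad()` before `backward()` matters because PyTorch accumulates gradients across backward passes; without it, each step would use the sum of all previous gradients.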

-

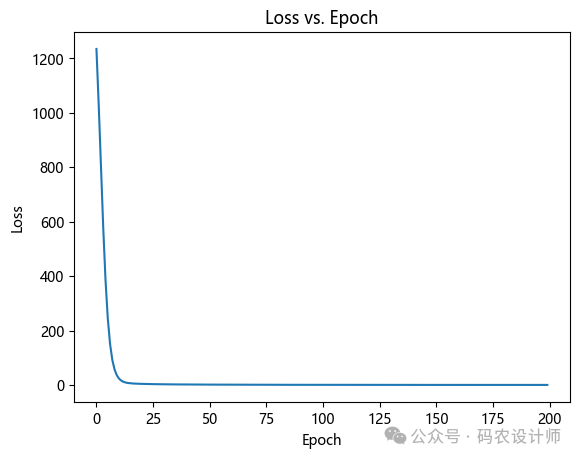

可视化损失:

# One loss value was recorded per epoch, so number the x-axis from 1 to len(losses)
plt.plot(range(1, len(losses) + 1), losses)
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.title('Loss vs. Epoch')
plt.show()

For more details, see the official website:

https://pytorch.org/

This article originally appeared on the WeChat official account 码农设计师.